The idea of AI revolutionizing software testing is captivating. The hype tells heroic tales of automated systems that run without oversight, predict bugs before they occur, and even write test cases on their own. But the daily grind of testing reveals a more complex reality. As someone who’s navigated the possibilities and limits of AI tools, I’m here to offer a grounded view 😮‍💨.

We’ll explore where AI genuinely enhances testing workflows in 2024, where it falls short, and what testers need to know to get the most out of these evolving technologies.

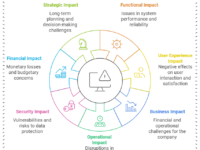

Mind Map of AI’s Role in Software Testing 🧠

A mind map can help to visualize where AI stands now and where it’s likely headed in software testing.

AI in Testing: What’s Real and What’s Not 📊

To understand AI’s place in testing, it’s crucial to separate achievable uses from the exaggerated claims:

| AI Application | Hype | Reality |
|---|---|---|
| Test Creation & Execution | Full automation without human input | Requires guidance, fails with complex logic |
| Bug Prediction | AI predicts all potential bugs | Basic pattern recognition, lacks human intuition |
| Test Case Optimization | Automates and refines test cases autonomously | Effective for repetitive test scenarios |
| Error Categorization | Classifies bugs with human-like accuracy | Useful for sorting basic bug types |
| Maintenance-Free Automation | Self-updating scripts that adapt to changes | Some automated updates, but requires regular review |

The Practical Side of AI in Software Testing 🔍

1. Test Case Optimization

In fast-paced environments, AI’s strength lies in optimizing test cases by identifying redundant scenarios or selecting tests based on historical patterns. This allows teams to run the most valuable tests first, reducing overall test cycles without sacrificing coverage.

- Example: Companies like Netflix use AI-driven tools to prioritize tests, focusing on the most impactful ones for faster releases.

- Tip: Integrating AI in your CI/CD pipeline can streamline regression testing, but keep an eye on performance to avoid unintended bottlenecks.
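To make "selecting tests based on historical patterns" concrete, here is a minimal sketch of the idea, not how any particular tool works. It assumes you already collect per-test run counts, failure counts, and durations; the `RunHistory` type, the `priority` formula, and the sample numbers are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class RunHistory:
    name: str
    runs: int           # how many times the test has run
    failures: int       # how many of those runs failed
    avg_seconds: float  # average duration of one run


def priority(test: RunHistory) -> float:
    """Smoothed failure rate per second of runtime (a hypothetical heuristic)."""
    # Laplace smoothing keeps brand-new tests from scoring exactly zero.
    failure_rate = (test.failures + 1) / (test.runs + 2)
    return failure_rate / max(test.avg_seconds, 0.001)


def prioritize(tests: list[RunHistory]) -> list[str]:
    """Return test names ordered from highest to lowest priority."""
    return [t.name for t in sorted(tests, key=priority, reverse=True)]


# Made-up history for three tests.
history = [
    RunHistory("test_login", runs=200, failures=2, avg_seconds=1.0),
    RunHistory("test_checkout", runs=200, failures=30, avg_seconds=4.0),
    RunHistory("test_search", runs=200, failures=10, avg_seconds=0.5),
]
ordered = prioritize(history)
print(ordered)
```

With these numbers the fast, occasionally failing `test_search` runs first and the stable `test_login` runs last. A real ML-based tool would likely use richer signals (for example, which files changed), but the ordering principle is the same, and a human should still review what ends up at the bottom of the list.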

2. Risk-Based Testing and Prioritization

AI helps us focus on critical areas by analyzing historical bugs, code complexity, and change frequency, highlighting areas most likely to introduce new issues. This insight is especially helpful when tight deadlines prevent exhaustive testing.

- Example: RiskLens provides AI-based risk analysis to guide testers on high-risk modules.

- Insight: AI improves risk-based testing, but it doesn’t eliminate the need for human intuition. After all, no algorithm can fully replace a tester’s sense for areas of high-impact risk.

“Risk management in testing isn’t about testing everything; it’s about testing the most critical things.” — James Bach
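The three signals mentioned above (historical bugs, code complexity, change frequency) can be combined into a simple risk score. The sketch below is an assumption-heavy illustration: the `ModuleStats` type, the weights, and the module data are invented, and a real model would learn the weights rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class ModuleStats:
    name: str
    past_bugs: int       # bugs historically traced to this module
    complexity: float    # e.g. a cyclomatic complexity figure
    recent_changes: int  # commits touching the module recently


def normalize(values: list[float]) -> list[float]:
    """Scale values to the 0..1 range so the signals are comparable."""
    top = max(values, default=0)
    return [v / top if top else 0.0 for v in values]


def risk_ranking(modules: list[ModuleStats], weights=(0.5, 0.2, 0.3)):
    """Rank modules by a weighted sum of normalized signals (weights are assumptions)."""
    bugs = normalize([m.past_bugs for m in modules])
    cplx = normalize([m.complexity for m in modules])
    churn = normalize([m.recent_changes for m in modules])
    w_bugs, w_cplx, w_churn = weights
    scores = {
        m.name: round(w_bugs * b + w_cplx * c + w_churn * ch, 3)
        for m, b, c, ch in zip(modules, bugs, cplx, churn)
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Made-up statistics for three modules.
modules = [
    ModuleStats("payments", past_bugs=12, complexity=35, recent_changes=20),
    ModuleStats("profile", past_bugs=3, complexity=10, recent_changes=2),
    ModuleStats("search", past_bugs=6, complexity=40, recent_changes=10),
]
ranking = risk_ranking(modules)
print(ranking)
```

The score is only a starting point for a conversation. If the tester knows that `profile` handles personal data, that context can justify moving it up the list no matter what the numbers say.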

3. Bug Triage and Categorization

AI’s clustering algorithms are excellent for sorting bugs, spotting duplicate issues, and categorizing them by similarity, which helps triage more efficiently. Teams dealing with large codebases or legacy systems often find this particularly helpful.

- Practical Bottleneck: Without clean, consistent data, categorization becomes inaccurate. For instance, if test results aren’t standardized, the AI model can generate confusing labels that lead to more work.

- Tip: Use an AI-driven defect management tool like Jira’s ML integrations to categorize and rank defects automatically.
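As a rough illustration of duplicate spotting, the sketch below groups bug titles by word overlap (Jaccard similarity). This is a deliberately simple stand-in for the clustering that ML-based tools perform, not a description of any product’s algorithm. The threshold of 0.5 and the sample reports are assumptions.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase a title and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two titles have in common (0.0 to 1.0)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def group_duplicates(titles: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Greedy grouping: a title joins the first group whose first entry is similar enough."""
    groups: list[list[str]] = []
    for title in titles:
        for group in groups:
            if jaccard(tokens(title), tokens(group[0])) >= threshold:
                group.append(title)
                break
        else:
            # No existing group was similar enough, so start a new one.
            groups.append([title])
    return groups


# Made-up bug reports.
reports = [
    "Login button unresponsive on Safari",
    "Checkout total ignores discount code",
    "Login button is unresponsive on Safari 17",
    "Discount code ignored in checkout total",
]
groups = group_duplicates(reports)
for group in groups:
    print(group)
```

This also shows the data-quality bottleneck in miniature: the grouping works here because the titles share vocabulary. If one team writes “auth failure” and another writes “can’t sign in,” word overlap drops to zero and the duplicates go unnoticed.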

The Overstated Promises of AI in Testing 🚫

While AI brings enhancements, here are a few areas where its capabilities don’t yet match the hype:

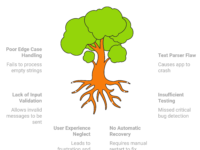

1. Autonomous Test Creation

The Hype: AI will eliminate the need for human-generated test cases by generating them autonomously.

Reality Check: Complex test cases often involve nuanced business logic, diverse user interactions, and unpredictable paths. AI requires massive amounts of clean, relevant data to replicate these paths meaningfully, which most teams cannot readily provide. Instead, testers must guide AI to create value within constraints.

2. Predicting Bugs Before They Happen

The Hype: AI can predict future bugs with accuracy, preventing issues before they’re written into the code.

Reality Check: Bug prediction models can identify risk patterns, but predicting the unknown is still a human skill. AI may flag specific types of issues based on historical data, but it cannot foresee unique bugs caused by new integrations or novel user scenarios. AI may provide probability metrics but requires human intervention to interpret them meaningfully.

“The role of AI in testing is not to replace the tester, but to assist in decision-making.” — Michael Bolton

3. Maintenance-Free Automation

The Hype: With AI, automation will no longer require maintenance as scripts automatically adapt to changes.

Reality Check: AI can detect broken test scripts or outdated selectors, but adapting these scripts to significant UI or functional changes is a complex task. Teams still need to dedicate time to maintenance to ensure stability, as tools are not yet sophisticated enough to re-engineer scripts entirely on their own.
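The gap between “detecting” and “re-engineering” can be seen in a small sketch of selector fallback. It is a simplified illustration under stated assumptions: the `available` set stands in for a real DOM query, and the selectors and the `locate` helper are hypothetical rather than taken from any framework.

```python
from typing import Optional


def locate(available: set[str], candidates: list[str], review_log: list[str]) -> Optional[str]:
    """Return the first candidate selector present on the page.

    `available` is a stand-in for querying a real page. When the primary
    selector is missing, a fallback is used but logged for human review.
    """
    primary = candidates[0]
    for selector in candidates:
        if selector in available:
            if selector != primary:
                review_log.append(f"'{primary}' missing, fell back to '{selector}'")
            return selector
    # Nothing matched: the script cannot repair itself, a person has to.
    review_log.append(f"no selector matched for '{primary}'")
    return None


# Hypothetical page after a redesign: the old id is gone.
page_after_redesign = {"[data-test=submit]", "button.primary"}
log: list[str] = []
found = locate(page_after_redesign, ["#submit-btn", "[data-test=submit]"], log)
print(found, log)
```

The fallback keeps the test running, but the log entry is the important part. Someone still has to confirm that the new element really is the same button, and no fallback list helps once the whole flow has been redesigned.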

Preparing for AI as a Tester 🌠

The key to thriving in this AI-driven landscape is to become a “context-driven AI tester.” Here are ways to incorporate AI’s strengths effectively:

- Invest in Continuous Learning: As AI advances, it’s vital to understand machine learning basics and how these models work. This knowledge empowers you to better leverage tools and interpret their results.

- Partner with Data Engineers: AI’s effectiveness grows with quality data. By working closely with data engineers, you can set up more effective data pipelines, enhancing AI’s performance in your projects.

- Integrate AI as a Helper, Not a Replacement: While AI can automate repetitive tasks and optimize processes, it’s best viewed as a tool to assist rather than a replacement for your judgment and experience.

The Future of AI in Testing: A Balanced Outlook 🎯

So, is AI in testing all hype or all hope? In reality, it’s somewhere in the middle. AI will not replace the human tester but rather enhance their capability by reducing mundane work, prioritizing critical test areas, and assisting in data-heavy processes.

For further reading, I recommend Michael Bolton’s classic, “Testing vs. Checking,” which emphasizes the irreplaceable value of human intuition and adaptability.

| AI’s Practical Role | Summary |
|---|---|
| Optimization | Streamlines repetitive or data-driven tasks. |
| Risk-Based Prioritization | Points testers to high-risk areas for focused testing. |
| Bug Triage & Categorization | Groups and labels bugs, easing triage. |
| Test Maintenance | Highlights maintenance needs but does not resolve them. |

So, in the end I will say… 🎓

AI is here to stay, but it’s far from the ultimate testing solution some envision. Embracing AI means understanding its strengths, working with its limitations, and keeping human insight at the core. AI tools enhance the tester’s toolkit, but they don’t replace the careful observation, creativity, and judgment that only human testers provide.