When testing large-scale systems, especially in today’s microservices-heavy architecture, managing test data becomes one of the most overlooked challenges. You see, it’s easy to say, “Let’s create realistic test data sets.” But the real challenge is creating data that scales, remains consistent across services, and accurately reflects edge case scenarios. In this blog, I’ll walk you through the often frustrating—but essential—process of managing test data for large systems in 2024. We’ll also dive into those pesky edge cases that can throw even the most thorough testing strategy off balance.

Why Is Test Data So Tricky in Large Systems?

Let’s first start with a simple fact: large-scale systems involve a vast array of microservices, often spread across different teams, technologies, and locations. Each microservice might have its own database, data model, and communication protocols. Ensuring consistent data across these services for testing is no easy task.

On top of that, edge cases like data synchronization failures, stale data, and version mismatches are common and can lead to real-world issues if not properly tested.

James Bach once said, “Testing isn’t about trying to prove the software works. It’s about finding where it doesn’t.” This couldn’t be truer when it comes to test data management—because the software will fail when the data is off. But finding those scenarios requires careful planning and an adaptive approach.

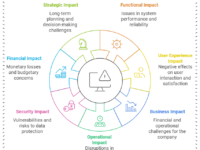

Key Challenges in Managing Test Data

Here’s the big picture: When you are handling data across a system with hundreds of microservices, some big headaches are waiting to happen.

✅ Data Consistency

Data consistency across services is a huge issue, especially when each service may be processing and updating the same entity (like a user profile or transaction) at different times. For example, if Service A updates the user’s profile, Service B may not immediately reflect that change due to latency or failed sync.

✅ Data Volume

In large systems, managing volumes of test data can be cumbersome. It’s not just about having a lot of data; it’s about having relevant data that will help you expose flaws and inefficiencies in the system.

✅ Synchronization Failures

Synchronization failures between services are more common than you’d think, particularly when network partitions occur. For instance, let’s say your system has a database split across regions. In the event of a network partition, part of your system might not have access to updated records, leading to stale data issues.

✅ Edge Case Complexity

Most of us in the testing field are familiar with basic edge cases like minimum/maximum values. But in large systems, edge cases can involve complex scenarios like multiple services competing to update the same piece of data, or latency spikes that cause temporary mismatches.

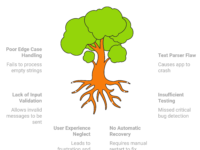

Mind Maps: Visualizing Test Data Management 💡

When I first started tackling this, I’d often find myself overwhelmed with how interconnected these systems are. That’s where mind maps became invaluable. Visualizing the flow of data through the system helps identify bottlenecks, failure points, and edge cases much faster.

Here’s a basic mind map outlining a test data management approach:

Using mind maps, you can quickly track how data flows, where it might break, and where to apply edge case testing. It’s a tool I recommend for everyone dealing with complex systems.

Example: Data Synchronization Failure in a Microservices System 📉

Let’s say you’re testing a financial application with several microservices—one that processes bank transactions, another that sends out transaction notifications, and another that logs transactions for reporting. Here’s a classic edge case:

- Service A processes a transaction and updates the database.

- Service B, which handles notifications, misses the update because of a network delay. As a result, the user doesn’t get notified of the transaction.

- Later, Service C logs an incorrect transaction because it was based on outdated information.

The root cause? A data synchronization failure caused by a short but impactful delay in the network. Imagine the chaos this would cause in a real-world banking app. Identifying and addressing this issue during testing requires both realistic data and edge case scenarios like network partitions and delayed syncs.

Practical Bottleneck Example: Handling Stale Data in APIs 🛑

Stale data is another headache that frequently arises, particularly when distributed databases are involved. Here’s a real-world example I’ve come across:

A global e-commerce platform maintains its inventory across several regions. When a customer makes a purchase in one region, it takes time for the central database to synchronize with the regional ones. This lag can create scenarios where customers see products listed as “available,” only to find out during checkout that they’re sold out.

To simulate this during testing:

- Generate test data representing real-time stock levels in each region.

- Introduce delays in synchronization, simulating real-world network issues.

- Measure the latency impact on the user experience during checkout.

This kind of test scenario not only tests your data pipelines but also reveals where your data synchronization mechanisms are vulnerable. Testing for stale data and sync delays can save the system from real-world disaster.

Strategies for Managing Test Data at Scale 🚀

So, how do we manage test data for these complex, distributed systems? Here’s a strategy that has worked for me:

✅ Use Realistic Data Mocks

Use tools like Mockaroo or Faker.js to create synthetic data that mimics real-world conditions. Don’t just fill your database with random data. Use test data that represents edge cases, like transactions that trigger synchronization or data refresh issues.

✅ Database Versioning

One way to avoid issues with data consistency across services is to implement database versioning. This helps to track when and where data was last updated, which is crucial for systems where multiple microservices update the same data.

✅ Data Resilience Mechanisms

Add circuit breakers to your services to handle network partitions and failures gracefully. Implement strategies like eventual consistency to ensure that services can recover from stale or missing data without crashing.

✅ Data Validation at API Level

A lot of issues arise from microservices passing incorrect or incomplete data. Introduce strict validation at the API level for all services to ensure that data is always in the expected format, and flag discrepancies before they propagate through the system.

A Critical Perspective on 2024 Trends 📅

Here’s where we need to get real. As of 2024, test data management tools are getting better, but they are still falling short when it comes to cross-service data consistency. Tools like TestDataBuilder are promising, but they struggle to handle edge cases at scale, particularly in microservices architecture. We need to push for more context-driven approaches in the tools we use. Standard solutions are no longer enough for the complexity we face.

Conclusion: Testing the System, Not Just the Code 🎯

To wrap things up, scalable test data management isn’t just about creating enough data; it’s about creating relevant, consistent data that stresses your system the way real users will. Edge cases, particularly those involving data synchronization failures and latency, are the real pain points in today’s microservices-heavy architectures. We need to approach testing with the mindset of exploring the system’s behavior under stress, not just its happy path.

By focusing on these strategies and building a mindset that embraces edge cases, you’ll avoid the pitfalls that come with incomplete data testing. Remember what Michael Bolton said: “Testing is about learning and exploring.” So go out there, explore the gaps in your data, and build systems that are resilient, not just functional.