Testing negative scenarios is an art form, especially in 2024, when data dependencies and intricacies in applications are only increasing. With data-driven applications in finance, healthcare, and e-commerce, it’s vital to address edge cases and push applications to their limits—particularly by exploring scenarios with missing relationships, incomplete transactions, or errant data subsets. So how do we effectively prepare our test data to meet these needs? Let’s dive into cloning and subsetting techniques for negative testing, along with some hard truths about their practical applications.

Why Cloning and Subsetting?

Cloning test data, that is, replicating a data set in a controlled environment, provides a safe playground for experimentation. By subsetting (taking only part of the cloned data set), we create an environment focused on specific testing needs. Negative scenarios call for exactly this: precise, controlled sets where common and uncommon flaws in application logic and database relationships can be dissected.

✅ Cloning a full database allows us to simulate real-world conditions without endangering live data, especially useful in sensitive fields.

✅ Subsetting helps avoid overwhelming testing environments with excessive data, keeping tests focused and efficient.
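To make the clone-then-subset idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `orders` table, its columns, and the sample rows are hypothetical stand-ins for a real production schema, and an in-memory database plays the role of the "live" system.

```python
import sqlite3


def clone_database(source: sqlite3.Connection) -> sqlite3.Connection:
    """Copy every table and row from source into a new in-memory database."""
    clone = sqlite3.connect(":memory:")
    source.backup(clone)  # sqlite3's online backup API (Python 3.7+)
    return clone


def subset_orders_missing_customer(clone: sqlite3.Connection) -> int:
    """Keep only orders with no customer; return how many rows remain."""
    clone.execute("DELETE FROM orders WHERE customer_id IS NOT NULL")
    clone.commit()
    return clone.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


# Stand-in for a "live" database (hypothetical schema and rows).
live = sqlite3.connect(":memory:")
live.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
live.executemany(
    "INSERT INTO orders (id, customer_id, total) VALUES (?, ?, ?)",
    [(1, 10, 25.0), (2, None, 40.0), (3, 11, 12.5), (4, None, 9.99)],
)
live.commit()

clone = clone_database(live)
remaining = subset_orders_missing_customer(clone)
live_count = live.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

The destructive `DELETE` only ever touches the clone, so the source keeps all four rows while the subset holds just the two orders without a customer.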

“The details are not the details. They make the design.” — Charles Eames. In testing, ignoring edge cases means leaving doors open for unexpected failures.

When Cloning Isn’t Enough: Pain Points of Full Data Duplication

Cloning is excellent for broad testing, but for negative scenarios it often leads to redundant tests and bloated data sets. Here's where subsetting shines, by homing in on specific combinations: missing fields, broken relationships, or boundary value subsets.

Pain Point 1: Database Bloat and Performance

Cloning without subsetting can cause significant delays in test execution. This problem isn't new, but it has intensified as data sets grow in volume and complexity. Running tests against an entire cloned set is not always practical; tests get delayed, and results become harder to interpret.

Pain Point 2: Masking Critical Negative Cases

Large datasets often mask critical edge cases, because the handful of problematic records is buried among thousands of valid ones. Suppose we're testing a healthcare application where missing patient details in an emergency situation need to throw an alert. If our dataset is too broad, we may never exercise this critical scenario.
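As a rough illustration of the healthcare case, the sketch below pulls the incomplete patient records into their own subset and checks that each one triggers an alert. The field names, the `emergency_alert` function, and the sample patients are all hypothetical; a real system would have its own rules for what counts as required.

```python
from typing import Optional

# Hypothetical list of fields that must be present in an emergency.
REQUIRED_FIELDS = ("name", "blood_type", "emergency_contact")


def missing_patient_fields(patient: dict) -> list:
    """Return the required fields that are absent, None, or empty."""
    return [field for field in REQUIRED_FIELDS if not patient.get(field)]


def emergency_alert(patient: dict) -> Optional[str]:
    """Return an alert message if required details are missing, else None."""
    missing = missing_patient_fields(patient)
    if missing:
        return f"ALERT: patient {patient.get('id')} is missing {', '.join(missing)}"
    return None


patients = [
    {"id": 1, "name": "A. Rivera", "blood_type": "O+", "emergency_contact": "555-0100"},
    {"id": 2, "name": "B. Chen", "blood_type": None, "emergency_contact": "555-0101"},
    {"id": 3, "name": "", "blood_type": "A-", "emergency_contact": None},
]

# The negative subset: only the records that should trigger an alert.
negative_subset = [p for p in patients if missing_patient_fields(p)]
alerts = [emergency_alert(p) for p in negative_subset]
```

Because the subset contains nothing but problem records, a single missing alert stands out immediately instead of hiding among valid patients.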

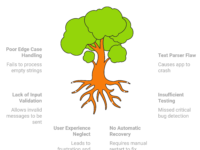

Visualizing the Process with a Mind Map 🧠

To understand the relationship between cloning and subsetting, consider a simple mind map:

Using mind maps can help us visualize where we’re headed, why certain subsets are required, and the intended outcome of each test subset.

Real-World Applications and Examples

Example 1: Missing Data Relationships in Financial Systems

A cloned financial database may include hundreds of transactions and account details. In reality, though, some data won't align perfectly. Imagine a scenario where account records are missing essential transactional data or contain only partial details. Here, a subset should specifically isolate incomplete transactions, allowing us to catch potential system breakdowns when the data is queried under unexpected conditions.
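One way to isolate these records is with a few targeted queries against the clone. The sketch below uses `sqlite3` with a hypothetical `accounts` and `transactions` schema, and it deliberately leaves out a foreign key constraint so that broken relationships can exist in the test data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE accounts (id INTEGER PRIMARY KEY, owner TEXT);
    CREATE TABLE transactions (
        id INTEGER PRIMARY KEY,
        account_id INTEGER,   -- no FOREIGN KEY on purpose, so orphans can exist
        amount REAL,
        status TEXT
    );
    INSERT INTO accounts VALUES (1, 'alice'), (2, 'bob'), (3, 'carol');
    INSERT INTO transactions VALUES
        (100, 1, 50.0, 'settled'),
        (101, 1, NULL, 'pending'),   -- incomplete: no amount
        (102, 9, 20.0, 'settled');   -- orphan: account 9 does not exist
""")

# Accounts that have no transactions at all.
accounts_without_transactions = [row[0] for row in conn.execute("""
    SELECT a.id FROM accounts a
    LEFT JOIN transactions t ON t.account_id = a.id
    WHERE t.id IS NULL
    ORDER BY a.id
""")]

# Transactions that point at an account that does not exist.
orphan_transactions = [row[0] for row in conn.execute("""
    SELECT t.id FROM transactions t
    LEFT JOIN accounts a ON a.id = t.account_id
    WHERE a.id IS NULL
    ORDER BY t.id
""")]

# Transactions with partial details.
incomplete_transactions = [row[0] for row in conn.execute("""
    SELECT id FROM transactions
    WHERE amount IS NULL OR status IS NULL
    ORDER BY id
""")]
```

Each list is a ready-made negative subset: feed it to the reporting or reconciliation code under test and watch how it copes.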

Example 2: Partial Data for E-commerce Orders

Suppose we’re testing an e-commerce application’s order system. Here, cloning an entire set of orders could be overwhelming, but a subset that focuses on orders missing address information, product details, or customer data can reveal how the system handles incomplete information.

Tips for Effective Cloning and Subsetting

Creating effective test subsets requires attention to detail. Here are some tips to keep your negative testing effective and lean:

- Automate the Cloning and Subsetting Process

Consider using scripts to clone and subset data. Tools like SQL, Python, or Apache JMeter can be utilized to automate cloning, and allow for conditions like missing primary keys or inconsistent foreign key constraints.

- Focus on Edge Cases Only

Not all data is necessary. Create a rule that subsets only include records with specific inconsistencies (e.g., null values in non-nullable fields).

- Simulate Different Data Scenarios

✅ Simulate scenarios like transaction rollbacks or partial data loads to observe system responses.

- Audit and Clean the Cloned Data

Before using the data, ensure no hidden dependencies will skew test results. Data audits are especially crucial when subsets are derived from large, interdependent systems.
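The rollback and partial-load tip can be sketched in a few lines. The example below assumes a hypothetical `orders` table and a made-up `load_orders` helper that fails on purpose partway through a batch, so we can check that nothing half-loaded is left behind.

```python
import sqlite3


def load_orders(conn, rows, fail_at=None):
    """Insert rows in one transaction; fail on purpose at index fail_at."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            for index, row in enumerate(rows):
                if fail_at is not None and index == fail_at:
                    raise RuntimeError("simulated failure during load")
                conn.execute(
                    "INSERT INTO orders (id, customer_id) VALUES (?, ?)", row
                )
    except RuntimeError:
        return False
    return True


def count_orders(conn):
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER)")
conn.commit()

rows = [(1, 10), (2, 11), (3, 12)]

failed_load_ok = load_orders(conn, rows, fail_at=2)  # fails on the third row
count_after_failure = count_orders(conn)

clean_load_ok = load_orders(conn, rows)  # same batch, no failure injected
count_after_success = count_orders(conn)
```

To simulate a partial load instead of a rollback, commit after each row rather than wrapping the whole batch in one transaction; the first two rows would then survive the failure.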

Tools and Techniques to Assist in Data Cloning

There are various tools out there, each suited for different environments and data types:

| Tool | Ideal For | Drawbacks |
|---|---|---|
| SQL Queries | Structured data subsetting | Limited flexibility in unstructured data |
| JMeter | Performance and load testing of cloned data | Less intuitive for non-developers |
| Mockaroo | Generating custom subsets with controlled data | Data might not mirror production data completely |
| Python Scripts | Custom logic to create subsets | Requires scripting knowledge |

A Quick SQL Snippet for Data Subsetting

```sql
-- Fetch orders with missing customer information
SELECT *
FROM Orders
WHERE customer_id IS NULL
   OR shipping_address IS NULL;
```

Using SQL to subset data for missing values and edge cases can give an instant view into where issues might arise.

Practical Bottlenecks in Cloning and Subsetting for Negative Testing

Despite the effectiveness of cloning and subsetting, practical bottlenecks still persist:

Bottleneck 1: Data Refresh and Synchronization

Cloning real-time data is challenging when databases are constantly updated. A cloned set from yesterday might already be outdated. Automating regular cloning is one workaround, but it adds complexity to the testing pipeline.

Bottleneck 2: Data Privacy and Compliance

In fields like finance or healthcare, cloning production data raises compliance issues. Data masking or anonymization is mandatory but introduces its own challenges, often masking real-world problems that negative tests seek to uncover.
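One way to soften this trade-off is to mask values while leaving the gaps in place. The sketch below is only an illustration: the field names and the salt are hypothetical, and a truncated salted hash is not a compliance-grade anonymization scheme on its own. The point is simply that `None` and empty strings pass through untouched, so the negative cases survive masking.

```python
import hashlib

# Hypothetical list of fields that hold personal data.
SENSITIVE_FIELDS = ("name", "email")


def pseudonymize(value, salt="test-env-salt"):
    """Replace a value with a stable token, but leave None and '' untouched."""
    if value is None or value == "":
        return value
    digest = hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()
    return "masked_" + digest[:12]


def mask_record(record):
    """Return a copy of record with sensitive fields pseudonymized."""
    masked = dict(record)
    for field in SENSITIVE_FIELDS:
        if field in masked:
            masked[field] = pseudonymize(masked[field])
    return masked


original = {"id": 7, "name": None, "email": "pat@example.com", "balance": 120.5}
masked = mask_record(original)
```

The same input always maps to the same token, so joins across masked tables still line up, while the missing `name` stays missing for the negative test to find.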

Criticism: Are Cloning and Subsetting Sustainable for 2024?

While data cloning has been a backbone technique, many experts argue for real-time, on-demand data generation instead. Jonathan Bach once remarked, “Testing isn’t finding bugs, it’s about finding the absence of bugs where there should be some.” With data cloning, we risk relying on static data. Moving towards data simulation, rather than static cloning, is seen as a more sustainable approach in 2024 and beyond.

Pro Tip: Consider generating test data dynamically through a sandbox environment with seeded configurations to simulate real-world scenarios with greater variability.
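Here is a small sketch of what seeded, dynamic generation might look like. The `generate_orders` function, its fields, and the `defect_rate` parameter are all hypothetical; the idea is that a fixed seed makes every run reproducible, while changing the seed or the defect rate gives fresh variability on demand.

```python
import random


def generate_orders(count, seed, defect_rate=0.2):
    """Generate orders; roughly defect_rate of them get one field blanked."""
    rng = random.Random(seed)  # private generator, so runs are reproducible
    orders = []
    for order_id in range(1, count + 1):
        order = {
            "id": order_id,
            "customer_id": rng.randint(1, 50),
            "shipping_address": f"{rng.randint(1, 999)} Test Street",
        }
        if rng.random() < defect_rate:
            # Blank one field at random to create a negative case.
            field = rng.choice(["customer_id", "shipping_address"])
            order[field] = None
        orders.append(order)
    return orders


def is_defective(order):
    return order["customer_id"] is None or order["shipping_address"] is None


first_run = generate_orders(100, seed=42)
second_run = generate_orders(100, seed=42)
all_broken = generate_orders(20, seed=1, defect_rate=1.0)
all_clean = generate_orders(20, seed=1, defect_rate=0.0)
```

When a test fails, recording the seed alongside the failure is enough to regenerate exactly the same data set later.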

Conclusion: Is Cloning Still Worth It?

In 2024, cloning and subsetting aren't the only options, but they remain powerful tools for testers committed to covering edge cases. Given the limitations and bottlenecks above, testers should balance these methods with data generation and simulation tools. When done thoughtfully, cloned and subsetted data provides a foundation to test against the unknown, highlighting areas where the application may falter.

Remember, the real power of cloning and subsetting isn’t in the process itself but in the insights it uncovers. Happy testing, and may your cloned data always reveal those hidden bugs!