Parameterized testing has become a go-to method for achieving most of the test coverage. Yet, like all methodologies, it’s not without its nuances. In this article, let’s explore how parameterized data generation can shape our approach to negative testing edge cases, while also looking critically at the limitations and bottlenecks of using it in today’s software testing landscape.

Understanding Parameterized Test Data in Negative Testing

Parameterized testing allows testers to drive multiple test cases using varied input data without rewriting individual test scripts. This is especially handy in negative testing, where the primary goal is to test for failure conditions—like invalid inputs, boundary cases, and unexpected values that might “break” the software. A well-parameterized negative testing setup offers the benefit of systematic and scalable test coverage.

But, as Jonathan Bach famously said, “Testing is about being curious and looking deeper.” This is crucial because parameterization can easily become a mechanical process that bypasses the deeper issues at hand, particularly in negative scenarios.

Why Parameterization is Ideal for Negative Testing Edge Cases

Edge cases are the outliers of expected behavior. They test the boundaries, ensuring that the system doesn’t crash under strange or abnormal conditions. Parameterized testing lets us automate various edge cases, such as:

✅ Invalid Inputs: Strings where numbers are expected, or special characters in fields designed for letters.

✅ Boundary Values: Extremes like zero, negative values, or maximum allowable limits.

✅ Null or Empty Inputs: Missing data that may cause crashes or unexpected behavior.

Techniques for Parameterizing Negative Test Data

Here’s a breakdown of how to structure parameterized test data for negative testing:

- Mind Map Your Edge Cases

Begin by creating a mind map. This visualization helps to break down every possible edge scenario based on factors like input type, length, or data constraints. You can map conditions such as valid, invalid, boundary, or null, then define possible variations for each. - Define Parameter Sets with Examples

For each input category, specify a list of parameters:- Integer Inputs: -1, 0, maximum integer, minimum integer.

- String Inputs: Empty string, extremely long string, strings with special characters like

!@#$%^&*(). - Null Values: Null or undefined inputs across all fields.

pytest.paramor Java’s JUnit@ParameterizedTestcan simplify this process by generating cases automatically. - Consider Custom Data Generators

Sometimes, we need more customized data than basic parameters. For instance:- Regex-Based Data Generation: Define a pattern to generate strings with random characters.

- Randomized Input Pools: Automate a pool of values that vary with each run for testing against unexpected behavior.

Practical Example of Parameterized Negative Testing

Let’s look at a practical example in Java using JUnit’s @ParameterizedTest.

import org.junit.jupiter.params.ParameterizedTest;

import org.junit.jupiter.params.provider.ValueSource;

import static org.junit.jupiter.api.Assertions.assertFalse;

class InputValidationTest {

@ParameterizedTest

@ValueSource(strings = { "", "123ABC", "##Invalid##", "verylongstringverylongstring..." })

void testInvalidInput(String input) {

assertFalse(ValidationUtils.isValid(input), "Input should be invalid");

}

}

In this example, we define a @ValueSource of invalid inputs for a username field that accepts only alphanumeric characters. The test automatically runs for each string in the list, asserting that the input is invalid.

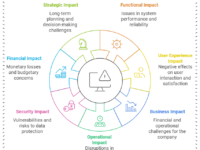

Critical Bottlenecks: When Parameterization Falls Short 🚫

While parameterized testing offers clear advantages, let’s be honest: it’s not a silver bullet for all negative testing scenarios. Here are some key pain points in 2024:

- Maintenance Complexity

As parameterized tests grow, managing and maintaining parameter sets becomes cumbersome. Every new edge case or invalid input requires an update, and if you’re using regex patterns or custom data generators, readability drops. This may lead to testers accidentally missing an essential edge case. - Data Explosion and Test Execution Time

Parameterization can lead to data explosion. Running tests with every possible combination can slow down test cycles, particularly in CI/CD pipelines. Modern solutions involve selective parameterization and limiting tests to critical combinations, but this requires manual analysis and, often, compromises test coverage. - Inadequate Context Coverage

Some edge cases require context-driven thinking and domain knowledge that parameterization can’t provide. “Negative testing is a mind game,” as Michael Bolton would say. You may need to understand unique system behaviors or interactions with other applications that parameterized inputs alone can’t capture.

Tools and Techniques for Efficient Parameterized Negative Testing

Here’s a look at a few tools that can help optimize parameterized negative testing:

| Tool | Feature | Example Use Case |

|---|---|---|

| Pytest | Data-driven testing with pytest.mark.parametrize | Testing boundary values in Python |

| JUnit | @ParameterizedTest for varied inputs | Null, empty, or special character cases in Java |

| WireMock | Mocking HTTP responses | Testing with unexpected API responses |

| JSON Schema Faker | Generates randomized JSON objects | Creating large datasets for invalid API payloads |

By using these tools, you can manage data-driven testing more efficiently. And combining these tools with proper data generators can help balance the complexity of maintaining parameter sets.

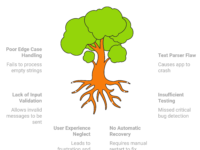

A Mind Map of Parameterized Testing Strategy for Negative Edge Cases

Here’s a visualization to structure your approach:

Summary of Parameterized Negative Testing Edge Cases

Documentation for Improvement: Document parameters and findings to refine testing strategies over time.

Focus on Edge Cases: Create exhaustive parameter sets to cover diverse edge cases.

Consistency and Integrity: Ensure consistent validation messages and data integrity post-failure.

Final Thoughts: The Limits of Parameterized Testing

Parameterized negative testing, while powerful, can become a crutch if used without deeper consideration. It’s tempting to think that by covering edge cases with parameterized inputs, we’re fully testing our system, but the reality is more complex. To quote James Bach, “Testing is exploring a system with the intent to reveal information,” which means we should continuously think about unique ways to challenge the system beyond data-driven limits.

To improve negative testing outcomes:

- Think Contextually: Don’t rely solely on automated data generation; use real-world insights.

- Combine with Exploratory Testing: Manually explore scenarios parameterized tests might miss.

- Optimize for CI/CD: Prioritize critical parameter combinations to avoid bottlenecks in CI/CD workflows.

Parameterized test data remains a valuable asset in negative testing edge cases, but as with any tool, balance is essential. Staying flexible, combining parameterized testing with exploratory methods, and leveraging context-driven insights will ensure a more resilient testing approach in 2024 and beyond.