Negative testing is often what distinguishes a secure, resilient software from one vulnerable to the unexpected. And if you’re trying to break your app to find all those hidden “what if” scenarios, then synthetic data generation is your best friend. But in 2024, generating reliable synthetic data for negative testing comes with its own set of challenges. Let’s talk about what synthetic data generation for negative testing looks like, its unique value, and some of the bumps on the road.

Why Synthetic Data for Negative Testing?

Negative testing pushes your application to its limits. By using synthetic data, you’re free to throw in data types, structures, or characters that would be nearly impossible to encounter in a real-world dataset. Using this approach, you can cover a range of scenarios and validate the robustness of your application. But let’s be clear – this process is anything but plug-and-play. It requires thoughtful setup and planning.

“Quality is free, but only to those who are willing to pay heavily for it.” — W. Edwards Deming

Key Areas in Negative Testing with Synthetic Data

Here’s a breakdown of common negative testing scenarios we aim to simulate with synthetic data, along with a few examples:

✅ Invalid Formats

Think of date formats. Instead of the standard “YYYY-MM-DD,” try something like “202310-31,” or “Oct_31st_2024”. This tests how well the system handles format anomalies and whether error handling kicks in gracefully.

yaml code# Sample Invalid Date JSON{

"start_date": "202310-31",

"end_date": "Oct_31st_2024"

}

✅ Boundary Values

Testing boundary values can expose handling issues that often go unnoticed. For instance, an age field might be set between 1 and 100, but what happens if you enter 0 or 101? Boundary values help expose such limits and whether they are enforced.

✅ Malformed JSON or XML

Malformed inputs can cause an application to behave unexpectedly. You might pass a half-written JSON with missing braces, or improperly nested XML. This helps uncover areas where the parser might break or produce erroneous results.

Json code{

"user": {

"name": "John",

"age": 30,

}

Best Practices in Generating Synthetic Data for Negative Testing

1. Mind Map Your Scenarios 🧠

A well-structured mind map can help identify and categorize different types of negative tests. Break down test cases into primary categories like Invalid Data Types, Malformed Requests, and Boundary Values.

- Invalid Data Types

- String in place of integer (e.g., “Thirty” for age)

- Object instead of array

- Malformed Requests

- Missing fields

- Incorrectly nested JSON objects

- Boundary Value Tests

- Entering values slightly above or below expected range

Here’s an example mind map layout that can be a helpful guide for visualizing edge cases:

2. Define Your Workflow with Flowcharts 📊

Flowcharts add clarity, ensuring each data scenario is created systematically and thoroughly. For example:

- Input: Define the type of data anomaly (e.g., special characters in a text field).

- Process: Outline steps to generate data (e.g., “Inject special characters like

#@$%^into name fields”). - Output: Expected system behavior (e.g., error message or sanitized output).

This chart can be your reference point in aligning synthetic data with expected application behavior.

3. Automate Data Generation with Scripts and Configs ⚙️

Use Python, JavaScript, or specialized tools like Faker and JSON Schema Faker to automate the generation of synthetic data:

Python code, example for generating synthetic data with Faker

from faker import Faker

fake = Faker()

synthetic_data = {

"name": fake.text(), # Random text to potentially break character constraints

"age": fake.random_int(-10, 110) # Values outside typical age range

}

Tip: Automating this process helps save time and ensures consistency across tests.

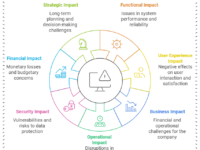

Pitfalls of Synthetic Data Generation in 2024

1. Overfitting Synthetic Data to Expected Outcomes

When synthetic data aligns too well with pre-set negative conditions, you risk testing only what you expect. This limits exploration. Balance is key – aim to create test cases that challenge assumptions and uncover real-world risks.

2. Complexity in Randomization

Sometimes randomness can lead to unhelpful or impractical test cases. For instance, if an address field populates with nonsensical input every time, your test results may be misleading. Setting controlled randomization parameters can improve accuracy.

3. High Maintenance Costs

When changes are made in production, your synthetic data sets might need updates to stay relevant. Additionally, negative test cases can sometimes require tailored datasets that are costly in both time and computational resources.

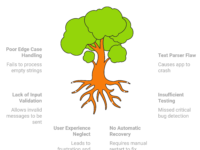

Practical Bottlenecks in the Process 🚧

Bottleneck #1: Testing Complex API Workflows with Nested JSON

Negative testing a REST API with synthetic data can become convoluted, especially when nested JSON objects come into play. An API expecting a structured JSON payload might fail unpredictably if the input is randomly broken at deeper levels, like:

{

"user": {

"details": { "first_name": "A very looooooong name that could break constraints" }

}

}

Solution: Limit negative inputs at deeper levels or create separate test cases specifically for nested structures.

Bottleneck #2: Data Compliance Issues

Synthetic data often skirts close to sensitive data boundaries, especially in regulated industries. Misuse of synthetic data in financial applications, for example, could lead to data compliance issues, even if it’s generated. This is where anonymized datasets, sometimes provided by dedicated vendors, come into play.

“Testing shows the presence, not the absence of bugs.” — Edsger Dijkstra

Wrapping Up: Synthetic Data in a Real-World Context 🌐

Synthetic data generation for negative testing is valuable, but it requires a tailored, methodical approach. By focusing on invalid formats, boundary values, and malformed inputs, testers can systematically uncover application weaknesses. In a fast-paced 2024 development cycle, synthetic data generation helps us keep up with quality demands, provided we’re aware of the trade-offs.

Testing isn’t only about finding what works; it’s about ensuring things break well when they’re supposed to.

An interesting post about Boundaries Unbounded.