Where AI Testing and Ethics Intersect

AI is increasingly integrated into testing, but it is still early days for realizing its full efficiency, scalability, and accuracy. Like many others who work with these tools daily, I’ve seen firsthand that AI-driven testing also introduces ethical dilemmas that can’t be ignored. From unintentional bias to data privacy, the ethical implications of AI call for more than technical skill: they demand critical, context-driven thinking. This article explores these challenges and offers practical insights for testers, drawing on real-world testing scenarios and the principles I apply in my daily work.

The Ethics of Bias in AI: Not Just a Data Issue

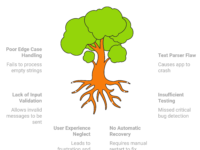

“Bias” is a word we often hear in AI discussions, yet in testing, it’s more than an abstract concept. When we train AI on biased data, that bias seeps into every aspect of testing. Consider a model trained primarily on data from North American users—it may perform well for them but fail miserably when tested with users from different regions. This bias can create inconsistent user experiences and impact the product’s reach and acceptance.

Practical Example: Regional Bias in an E-Commerce App

Let’s say an e-commerce app primarily uses data from English-speaking customers. The AI may optimize the app’s search and filtering features for English-language queries, unintentionally marginalizing users who search in other languages. This oversight impacts accessibility, reducing user satisfaction and potentially alienating entire user demographics.
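
One way a tester might surface this kind of regional bias is to break search test results down by query language and compare success rates. The sketch below is illustrative only: the result data, the language codes, and the 10-point gap threshold are assumptions, not figures from a real app.

```python
from collections import defaultdict


def success_rate_by_group(results):
    """Return {group: fraction of passing checks} from (group, passed) pairs."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for group, passed in results:
        totals[group] += 1
        if passed:
            passes[group] += 1
    return {group: passes[group] / totals[group] for group in totals}


def flag_disparities(rates, max_gap=0.10):
    """Return groups whose rate trails the best-performing group by more than max_gap."""
    best = max(rates.values())
    return sorted(group for group, rate in rates.items() if best - rate > max_gap)


# Hypothetical outcomes: (query language, did the search return a relevant item?)
search_results = [
    ("en", True), ("en", True), ("en", True), ("en", True),
    ("es", True), ("es", False), ("es", True), ("es", False),
    ("hi", False), ("hi", False), ("hi", True), ("hi", False),
]

rates = success_rate_by_group(search_results)
lagging = flag_disparities(rates)
print(rates)
print(lagging)
```

With this made-up data, English queries always succeed while Spanish and Hindi queries lag well behind, so both are flagged for follow-up. In practice the threshold and the definition of a "relevant" result would need to be agreed with the product team.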

Visual Guide: How Bias Enters the AI Testing Workflow

To understand where bias can emerge, here’s a mind map showing critical points in the AI testing lifecycle where bias can seep in:

Bias in AI-Driven Testing

- Historical data bias: legacy biases in data

- Training data bias: over-representation of certain groups, or lack of diversity

- Model interpretation bias: misinterpreted results, affecting user experience

Transparency and the Black Box Dilemma

In traditional testing, we understand the “why” behind each decision or action. But with AI, we face the “black box” problem—algorithms can yield results without clear explanations. This lack of transparency complicates trust and accountability. Without knowing how an AI model arrived at its conclusions, testers are left questioning its reliability and fairness.

Solution – Embrace Explainable AI (XAI)

Explainable AI, or XAI, provides insights into AI’s decision-making processes, offering tools like SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations). For instance, LIME lets testers see which data features were most influential in a particular decision, giving valuable insight into potential biases and improving trust.
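
To make the idea of feature influence concrete without depending on any particular library, here is a minimal sketch of a simpler, related technique: feature ablation (sometimes called occlusion). It is not LIME or SHAP. It replaces one feature at a time with a baseline value and records how much the model's score changes for a single instance. The `toy_score` function, the feature names, and the weights are hypothetical stand-ins for an opaque model.

```python
def ablation_attribution(score, instance, baseline):
    """For each feature, swap in its baseline value and report the score change."""
    original = score(instance)
    contributions = {}
    for name in instance:
        perturbed = dict(instance)          # copy so the original stays intact
        perturbed[name] = baseline[name]    # "remove" one feature
        contributions[name] = original - score(perturbed)
    return contributions


# Hypothetical stand-in for an opaque model that ranks a product for a query.
def toy_score(features):
    return (
        0.6 * features["query_is_english"]
        + 0.3 * features["title_match"]
        + 0.1 * features["in_stock"]
    )


instance = {"query_is_english": 1.0, "title_match": 1.0, "in_stock": 1.0}
baseline = {"query_is_english": 0.0, "title_match": 0.0, "in_stock": 0.0}

contributions = ablation_attribution(toy_score, instance, baseline)
ranked = sorted(contributions, key=contributions.get, reverse=True)
print(ranked)
```

Here the language flag contributes more to the score than the actual title match, which is the kind of red flag a tester would want to raise. Ablation ignores interactions between features, so for real models a dedicated tool such as LIME or SHAP is likely the better choice.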

The Job Displacement Dilemma: Automation Meets Human Expertise

AI’s efficiency raises another ethical question—job displacement. Automation can streamline repetitive tasks, which may reduce the demand for manual testing roles. While this can be unsettling, I’ve observed that AI does not replace the human context or critical thinking we bring to testing. Instead, it shifts our role, demanding new skills like data interpretation, model training, and ethics management.

Tip: Upskill for Ethical and Analytical Roles

Instead of fearing AI, embrace it. Testers who learn to analyze AI decisions, spot ethical red flags, and provide nuanced judgment will remain invaluable. Focus on developing a blend of technical and ethical skills, including data literacy, model interpretability, and ethical AI practices.

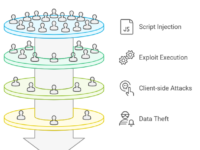

A Bottleneck in Data Privacy

One of the most pressing concerns in AI-driven testing is data privacy. Many AI models used in testing require large datasets, often involving sensitive user information. Without strict safeguards, data privacy could be compromised, leading to severe regulatory consequences and eroding customer trust.

Practical Solution: Synthetic Data Generation

Synthetic data allows testers to create artificial datasets that mirror real user data, maintaining privacy while ensuring models have the information they need. In testing a healthcare app, we generated synthetic patient data to avoid using real, sensitive information. This way, we safeguarded privacy while ensuring the testing was still effective.
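
As a rough illustration of the idea, the sketch below generates fake patient records using only Python's standard library. It is not the generator used in the project described above. The field names, value ranges, and condition list are all assumptions, and each field is drawn independently, so correlations present in real data are not preserved.

```python
import random


def make_synthetic_patients(n, seed=0):
    """Generate n fake patient records; no value is derived from a real person."""
    rng = random.Random(seed)  # seeded so test runs are reproducible
    conditions = ["hypertension", "asthma", "diabetes", "none"]
    patients = []
    for i in range(n):
        patients.append({
            "patient_id": f"SYN-{i:05d}",             # clearly marked as synthetic
            "age": rng.randint(0, 95),
            "sex": rng.choice(["F", "M"]),
            "condition": rng.choice(conditions),
            "systolic_bp": round(rng.gauss(120, 15)),  # assumed mean and spread
        })
    return patients


patients = make_synthetic_patients(100, seed=42)
print(patients[0])
```

Prefixing IDs with `SYN-` makes it harder for synthetic rows to be mistaken for production data. A more realistic generator would fit distributions to real data under privacy controls and would need its own review to confirm that no real record can be reconstructed.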

Table: Ethical Challenges and Practical Solutions in AI-Driven Testing

| Ethical Challenge | Impact on Testing | Solution/Technique |
|---|---|---|
| Bias in Data | Skews test results; misses diverse perspectives | Ensure data diversity; employ XAI tools |
| Transparency Issues | Reduces tester trust in AI decisions | Use XAI methods (e.g., LIME, SHAP) for insight |
| Job Displacement | Shifts demand for manual testing | Upskill for AI oversight roles in testing |
| Privacy and Data Use | Risk of data breaches, compliance issues | Apply synthetic data generation techniques |

The Role of Ethical Guidelines in Testing

The ethical challenges we face are ongoing, and a structured framework can provide much-needed guidance. In our testing team, we use ethical checklists as part of every AI-driven test plan. These checklists include questions like:

- “Does this dataset reflect our target user demographics fairly?”

- “Are there any unexplained results from the AI model that need further scrutiny?”

- “Have we ensured that user data privacy is fully protected?”

These questions prompt us to rethink AI tools and ensure our testing meets ethical standards.
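
The first checklist question can be partly automated. The sketch below compares each group's share of a dataset against a target share and reports the groups that deviate by more than a tolerance. The region labels, target shares, and the 5-point tolerance are hypothetical, and a numeric check like this supports the tester's judgment rather than replacing it.

```python
from collections import Counter


def representation_gaps(records, target_shares, key="region", tolerance=0.05):
    """Return {group: observed share minus target share} for groups outside tolerance."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset is empty")
    gaps = {}
    for group, target in target_shares.items():
        observed = counts.get(group, 0) / total
        if abs(observed - target) > tolerance:
            gaps[group] = round(observed - target, 3)
    return gaps


# Hypothetical dataset that is heavily skewed toward one region.
dataset = [{"region": "NA"}] * 80 + [{"region": "EU"}] * 15 + [{"region": "APAC"}] * 5
target = {"NA": 0.40, "EU": 0.30, "APAC": 0.30}

gaps = representation_gaps(dataset, target)
print(gaps)
```

A positive gap means the group is over-represented relative to the target audience and a negative gap means it is under-represented, which gives the team a concrete starting point for the checklist discussion.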

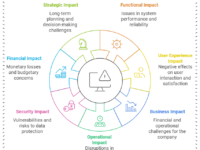

Multiple Viewpoints on AI in Testing

Business Perspective 🏢

For businesses, AI-driven testing can reduce costs and expedite time-to-market. However, ignoring ethical risks could lead to legal repercussions and a loss of user trust. In a competitive landscape, businesses need to weigh these benefits against the potential long-term costs of ethical breaches.

Tester and Practitioner Viewpoint 👨‍💻

Testers bring an irreplaceable human touch to AI-driven testing. By focusing on AI’s ethical implications and using a critical, context-driven approach, testers can serve as a bridge between technology and user values. This unique role protects user interests and maintains testing integrity.

Final Thoughts: Ethics as a Continuous Journey in AI Testing

AI in testing offers unmatched advantages, but with these gains come responsibilities we must not ignore. Ethical considerations like bias, transparency, job impact, and privacy are not peripheral—they’re central to the future of trustworthy testing. As AI advances, testers have an essential role in ensuring these tools reflect the diversity and values of the users they serve.